Just a few months ago, an MIT paper by Andrew W. Lo and Jillian Ross examined generative AI and financial advice through a case study. It certainly made our industry sit up and take notice: “An LLM (Large Language Model) can role-play a financial advisor convincingly and often accurately for a client,” they wrote.

More concerning still, the authors contended that AI can be trained to synthesize a personality that clients will find engaging, while cautioning that “even the largest language model currently appears to lack the sense of responsibility and ethics required by law from a human financial advisor.”

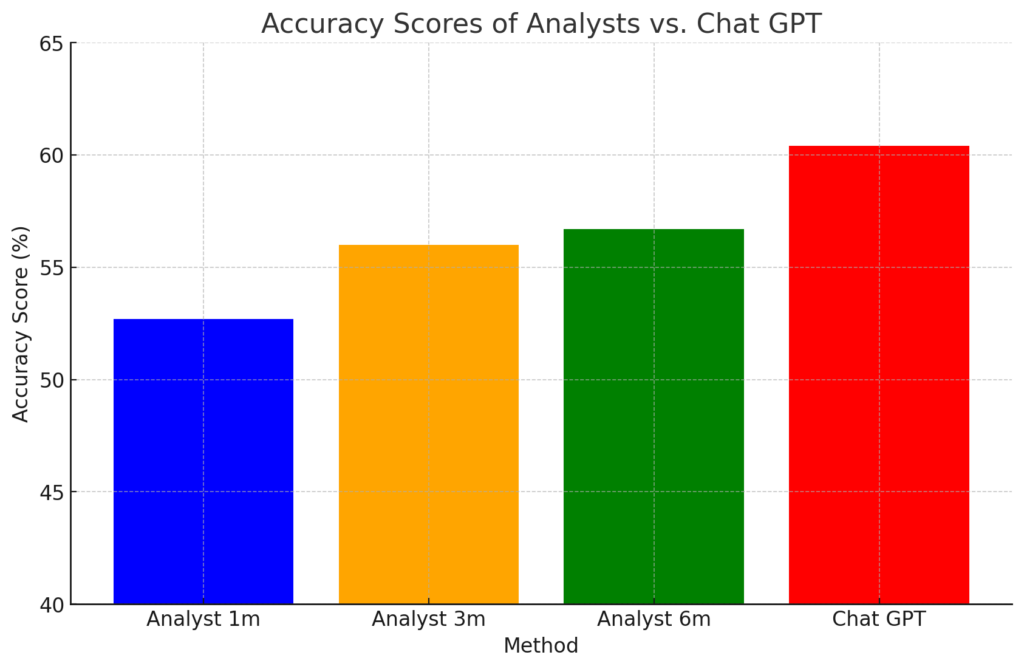

And now, it’s time for analysts to be alarmed. In a brand-new study from the University of Chicago Booth School of Business, researchers Alex G. Kim, Maximilian Muhn, and Valeri V. Nikolaev show that large language models (LLMs), specifically GPT-4, can outperform human financial analysts in predicting earnings changes from financial statements.

The authors used GPT-4 Turbo for their research and anonymized the data as far as possible. They found that the model's accuracy was “remarkably higher than that achieved by the analysts.”

“Finally, we explore the economic usefulness of GPT’s forecasts by analyzing their value in predicting stock price movements,” they said in their study. “We find that the long-short strategy based on GPT forecasts outperforms the market and generates significant alphas and Sharpe ratios. For example, alpha in the Fama-French three-factor model exceeds 12% per year.”
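For readers who want the definitions behind that quote, the textbook forms are sketched below; this is the standard specification, not necessarily the exact one the authors estimated. Alpha is the intercept $\alpha$ in a time-series regression of a portfolio's excess return on the three Fama-French factors: the market's excess return, SMB (small minus big, the size factor), and HML (high minus low, the value factor). Here $R_{p,t}$ is the portfolio return in period $t$, $R_{f,t}$ the risk-free rate, and $R_{M,t}$ the market return.

$$
R_{p,t} - R_{f,t} = \alpha + \beta_{M}\,(R_{M,t} - R_{f,t}) + \beta_{S}\,\mathrm{SMB}_t + \beta_{H}\,\mathrm{HML}_t + \varepsilon_t
$$

The Sharpe ratio scales the average excess return by its volatility:

$$
\mathrm{SR} = \frac{\mathbb{E}\left[R_{p,t} - R_{f,t}\right]}{\sigma\left(R_{p,t} - R_{f,t}\right)}
$$

A positive $\alpha$ means the strategy earned returns that its exposure to market, size, and value does not explain. For a zero-cost long-short portfolio, the long-minus-short return is typically used directly on the left-hand side, since it is already an excess return. If $\alpha$ is estimated from monthly returns, it is commonly annualized by multiplying by 12, so an alpha above 12% per year corresponds to roughly 1% of unexplained return per month.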

But what does that mean for us?

The findings of this study suggest that we could see a significant shift in the role of financial advisors in our lifetimes, which makes it important that we learn to use the software to improve our offerings rather than be replaced by it. Although studies show that our clients prefer advice from real people, that may not always be the case.

In fact, certain groups already trust AI more than financial professionals (yes, that’s you, white males). That said, many people still want to check what AI tells them with a financial advisor. “What we learned, though, was most people who are consulting these resources are verifying what they hear with a financial advisor,” Kevin Keller, CEO of the CFP Board, told CNBC.

Here are key takeaways:

How did they test financial analysis accuracy?

The researchers used a structured methodology to evaluate how well large language models (LLMs), specifically GPT-4, perform financial statement analysis and predict future earnings, and compared the results with those of human analysts and traditional machine learning models.

Here's a summary of their approach:

In short, the methodology rigorously tested GPT-4's capability in financial statement analysis through anonymization, standardized data handling, prompt engineering, robust performance evaluation, and comparison with both human and machine benchmarks. The results showed that, at least in this study, GPT-4 has the potential to outperform traditional financial analysts and specialized machine learning models in predicting future earnings.
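Comparing a model with human and machine benchmarks comes down to scoring each forecaster against the same realized outcomes. Below is a minimal Python sketch, assuming each firm-year is labeled with the actual direction of the earnings change (+1 for an increase, -1 for a decrease) plus one forecast from the model and one from analysts. The `FirmYear` record, its field names, and the toy data are hypothetical illustrations, not the study's data or code. The sketch also computes a majority-class baseline, a useful sanity check because a forecaster that always predicts the more common outcome can look accurate without any skill.

```python
from dataclasses import dataclass


# Hypothetical record for one firm-year (not the study's data format).
# Directions are coded +1 = earnings increase, -1 = earnings decrease.
@dataclass
class FirmYear:
    firm: str
    actual: int
    model_forecast: int
    analyst_forecast: int


def directional_accuracy(records, field):
    """Share of firm-years where the forecast stored in `field` matches the actual direction."""
    if not records:
        raise ValueError("no records to score")
    hits = sum(1 for r in records if getattr(r, field) == r.actual)
    return hits / len(records)


def majority_baseline(records):
    """Accuracy of naively predicting the most common actual direction for every firm-year."""
    if not records:
        raise ValueError("no records to score")
    increases = sum(1 for r in records if r.actual == 1)
    return max(increases, len(records) - increases) / len(records)


# Toy, made-up data purely for illustration; these are not results from the study.
sample = [
    FirmYear("A", 1, 1, 1),
    FirmYear("B", -1, -1, 1),
    FirmYear("C", 1, 1, -1),
    FirmYear("D", -1, 1, -1),
    FirmYear("E", 1, 1, 1),
]

if __name__ == "__main__":
    for name, field in [("model", "model_forecast"), ("analyst", "analyst_forecast")]:
        print(f"{name} accuracy: {directional_accuracy(sample, field):.2f}")
    print(f"majority-class baseline: {majority_baseline(sample):.2f}")
```

On this made-up sample the script prints an accuracy of 0.80 for the model, 0.60 for the analysts, and 0.60 for the majority-class baseline; the point is the scoring procedure, not the numbers.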

It is still early days, and the technology is evolving rapidly, but as LLMs demonstrate their prowess in financial statement analysis, the financial advisory industry could be on the brink of transformation.
